Scientists develop non-invasive AI system that may "decode" the human brain

May 2 Update: Scientists have developed a noninvasive artificial intelligence (AI) system that translates human brain activity into a continuous stream of text, according to a peer-reviewed study published Monday in the journal Nature Neuroscience.

Dr. Huth and other scientists prepare to collect brain activity data

The system, called a semantic decoder, may eventually help patients who have lost the ability to communicate physically because of a stroke, paralysis or a degenerative disease. Researchers have previously developed language-decoding methods that pick up attempted speech from people who have lost the ability to speak, and that let paralyzed people write simply by thinking about writing. But the new semantic decoder is one of the first that does not rely on brain implants. In the study, it turned a person's imagined speech into text, and when participants watched a silent film, it produced a relatively accurate description of what was happening on screen.

Researchers at the University of Texas at Austin built the system partly on a Transformer model, similar to the language models that underpin OpenAI's ChatGPT and Google's Bard. Participants trained the decoder by listening to podcasts for several hours inside a functional magnetic resonance imaging (fMRI) scanner, a large machine that measures brain activity.

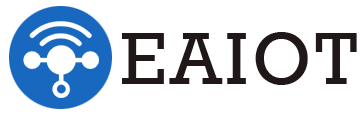

Scientists train models to create mappings between brain activity and semantic features

To train the model, the scientists recorded fMRI data from three participants while each listened to 16 hours of narrative stories. From these recordings, the model learned a mapping between brain activity and semantic features, capturing the meanings of particular phrases and the brain responses associated with them.
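The article does not say what form this mapping takes. As an illustration only, the sketch below assumes a linear "encoding model" fit with ridge regression, which predicts each small brain region's (voxel's) fMRI response from a vector of semantic features. The function names, the regularization value `lam` and the toy data are hypothetical, not the study's.

```python
import random


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ridge(features, responses, lam=1.0):
    """Assumed ridge fit: w = (X^T X + lam*I)^-1 X^T y, one weight vector per voxel.

    features: T x F (semantic features per time point)
    responses: T x V (hypothetical voxel responses per time point)
    """
    f = len(features[0])
    # X^T X + lam * I is shared by every voxel.
    xtx = [[sum(row[i] * row[j] for row in features) + (lam if i == j else 0.0)
            for j in range(f)] for i in range(f)]
    weights = []
    for v in range(len(responses[0])):
        xty = [sum(features[t][i] * responses[t][v] for t in range(len(features)))
               for i in range(f)]
        weights.append(solve(xtx, xty))
    return weights  # V x F


def predict(weights, feature_vec):
    """Predicted response of every voxel to one semantic feature vector."""
    return [sum(w * x for w, x in zip(wv, feature_vec)) for wv in weights]


# Toy data: 200 time points, 3 semantic features, 2 voxels with known weights.
random.seed(0)
true_w = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]]
X = [[random.gauss(0, 1) for _ in range(3)] for _ in range(200)]
Y = [[sum(w * x for w, x in zip(wv, row)) + random.gauss(0, 0.1) for wv in true_w]
     for row in X]
w_hat = fit_ridge(X, Y, lam=0.1)
print(w_hat)  # each row should land close to the matching row of true_w
```

Real studies fit many thousands of voxels and far richer features; the point here is only that, once trained, `predict()` estimates how the brain is expected to respond to a given meaning.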

"It's not just a verbal stimulus," said Alexander Huth, a neuroscientist at the University of Texas at Austin who helped lead the study. "We're getting at the meaning, some idea of what's going on. The fact that that's possible is very exciting."

Once trained, the AI system can generate a stream of text while participants listen to, or imagine telling, a new story. The generated text is not an exact transcript; the researchers designed the decoder to capture the gist of what is being heard or thought.
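The article does not describe how text is produced once the mapping is learned. One approach that fits an encoding model like the one in the training step, assumed here rather than taken from the article, is to propose candidate phrases, predict the brain response each would evoke, and keep the candidate whose prediction best matches the recorded activity. The weights, phrases, feature vectors and the squared-error score below are all hypothetical toy values.

```python
# Hypothetical encoding weights: 3 voxels x 3 semantic features.
WEIGHTS = [
    [1.0, 0.0, 0.5],
    [0.0, 1.0, -0.5],
    [0.5, 0.5, 1.0],
]

# Hypothetical semantic feature vectors for a few candidate phrases.
CANDIDATES = {
    "a person is driving a car": [0.9, 0.1, 0.0],
    "someone is buying groceries": [0.0, 0.8, 0.3],
    "a dog is barking at night": [0.1, 0.0, 0.9],
}


def predict(features):
    """Predicted response of each voxel under the assumed linear encoding model."""
    return [sum(w * x for w, x in zip(row, features)) for row in WEIGHTS]


def squared_error(a, b):
    """Mismatch between a predicted and an observed response pattern."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def decode(observed):
    """Return the candidate whose predicted response is closest to `observed`."""
    return min(CANDIDATES,
               key=lambda text: squared_error(predict(CANDIDATES[text]), observed))


# Simulate a slightly noisy recording evoked by the "driving" meaning.
observed = [v + n for v, n in zip(predict([0.9, 0.1, 0.0]), [0.05, -0.03, 0.02])]
print(decode(observed))  # → a person is driving a car
```

Because a decoder of this kind returns whichever candidate's meaning best explains the recording, its output can paraphrase rather than transcribe, which is in line with the gist-level text the researchers describe.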

According to a press release from the University of Texas at Austin, about half of the time the text produced by the trained AI system closely, and sometimes exactly, matched the intended meaning of the original words. For example, when a participant heard the words "I don't have a driver's license yet," the decoder rendered the thought as "She hasn't even started learning to drive yet."

"This is a real leap forward for noninvasive methods, which typically decode only single words or short sentences," Huth said in the press release. "We are getting the model to decode continuous language with complex ideas over long periods of time."

Participants were also asked to watch four silent videos while in the scanner, and the AI system was able to accurately describe "certain events" in them, according to the press release.

Limitations

However, Dr. Huth and his colleagues note that this approach to language decoding has limitations. First, fMRI scanners are bulky and expensive. In addition, training the model is a long, tedious process that must be carried out separately for each person to be effective. When the researchers used a decoder trained on one person to read another person's brain activity, it failed, suggesting that each brain represents meaning in its own way.

As of Monday, the decoder could not be used outside the laboratory because it relies on an fMRI scanner, but the researchers believe it could eventually work with a more portable brain-imaging system. The study's principal investigators have filed a Patent Cooperation Treaty (PCT) patent application for the technology.

Participants were also able to thwart the decoder by deliberately thinking about other things, which blocked out their internal monologue. The AI may be able to read human thoughts, but for now it can do so only one person at a time, and only with that person's permission.
