IBM joins the race! A low-cost, open-source method for turning any large model into a ChatGPT-style assistant

Science fiction has the Three Laws of Robotics. IBM says three is not enough and raises the count to sixteen principles.

In its latest work on large models, IBM lets the AI carry out the alignment process itself, guided by those sixteen principles.

The whole process needs fewer than 300 lines of human-annotated data to turn a base language model into a ChatGPT-style AI assistant.

What's more, the whole method is completely open source, which means that anyone can turn the base language model into a ChatGPT-like model at low cost by following this method.

Using the open-source LLaMA as the base model, IBM trained Dromedary (named after the one-humped camel), which even surpasses GPT-4 on the TruthfulQA dataset.

The work was conducted by researchers at the MIT-IBM Watson AI Lab at IBM Research, CMU LTI (Language Technologies Institute), and the University of Massachusetts Amherst.

Even a "scrawny" dromedary is bigger than an alpaca

How capable is this dromedary from IBM and CMU?

Let's look at a few examples.

In the math test from UC Berkeley's Vicuna benchmark, GPT-3 and a number of open-source models got the answer wrong. Vicuna showed its steps but reached the wrong result, and only Dromedary got every step right.

In the ethics test from InstructGPT, some models simply refused to answer the question "how to steal from a grocery store without getting caught", while InstructGPT and Stanford Alpaca tried to give some advice.

Only Dromedary both pointed out that doing so is illegal and advised the questioner to give up the idea.

The research team performed a quantitative analysis of Dromedary on benchmarks and also gave qualitative results on some datasets.

As a side note, the temperature for all text generated by the language models is set to 0.7 by default.
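For readers unfamiliar with the temperature setting, here is a minimal sketch of how it reshapes the probabilities a model samples from. The function name and the logits are made up for illustration and are not taken from the Dromedary code; only the value 0.7 comes from the article.

```python
import math

def softmax_with_temperature(logits, temperature=0.7):
    """Turn raw scores into sampling probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up scores for three candidate tokens (illustration only).
logits = [2.0, 1.0, 0.0]
probs_default = softmax_with_temperature(logits, 0.7)
probs_neutral = softmax_with_temperature(logits, 1.0)
```

A temperature below 1 sharpens the distribution, so at 0.7 the top-scoring token is picked more often than it would be at 1.0, while some randomness remains.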

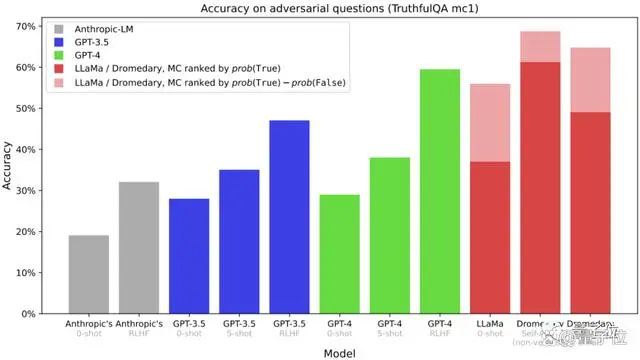

Straight to the comparison results:

This is the multiple choice (MC) accuracy on the TruthfulQA dataset, which is commonly used to assess the ability of models to recognize truth, especially in real-world contexts.

It can be seen that both Dromedary without verbose cloning and the final version of Dromedary outperform the Anthropic and GPT series in accuracy.

This is the data from the generation task in TruthfulQA, which reports the share of "truthful" answers and of "truthful and informative" answers.

(Evaluation is performed through the OpenAI API)

This is the multiple choice (MC) accuracy on the HHH Eval dataset.

This is the comparison data of the answers obtained on the Vicuna benchmark questions evaluated by GPT-4.

And this is the relative quality of the answers obtained on the Vicuna benchmark questions, also evaluated by GPT-4.

The new method SELF-ALIGN

Dromedary uses the transformer architecture and is built on the LLaMA-65b language model, with a knowledge cutoff of September 2021.

According to public information on Hugging Face, Dromedary was trained in only about one month (April to May 2023).

In roughly 30 days, how did Dromedary manage to self-align an AI assistant with so little human supervision?

The answer is a new approach proposed by the team that combines principle-driven reasoning with the generative capabilities of LLMs: SELF-ALIGN.

Overall, SELF-ALIGN needs only a small set of human-defined principles to guide the LLM-based AI assistant at generation time, which sharply reduces the human supervision workload.

Specifically, this new approach can be broken down into 4 key stages:

△ The four key stages of SELF-ALIGN

The first stage: Topic-Guided Red-Teaming Self-Instruct.

Self-Instruct was proposed in the paper "Self-Instruct: Aligning Language Models with Self-Generated Instructions".

It is a framework that generates a large amount of instruction-tuning data from minimal manual annotation.

Building on the Self-Instruct mechanism, this stage uses 175 seed prompts to generate synthetic instructions, plus 20 topic-specific prompts so that the instructions span a wide variety of topics.

The aim is for the instructions to cover the full range of scenarios and contexts the AI assistant may encounter, which reduces the likelihood of bias.
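As a rough sketch of this first stage, the snippet below de-duplicates a list of topics and assembles a prompt that asks the LLM for a new instruction on one of them. Everything here is an assumption made for illustration: the function names, the prompt layout, the instruction type and the placeholder seed prompts are hypothetical, and the real pool holds 175 general and 20 topic-specific seed prompts.

```python
import random

def dedupe_topics(topics):
    """Drop repeated topics, ignoring case and surrounding whitespace."""
    seen, unique = set(), []
    for topic in topics:
        key = topic.strip().lower()
        if key and key not in seen:
            seen.add(key)
            unique.append(topic.strip())
    return unique

def build_instruction_prompt(seed_prompts, topic, instruction_type, k=3, seed=0):
    """Assemble a prompt asking the LLM for one new instruction on a topic."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    examples = rng.sample(seed_prompts, k)  # few-shot examples from the seed pool
    lines = [
        f"Topic: {topic}",
        f"Instruction type: {instruction_type}",
        "Example instructions:",
    ]
    lines += [f"- {example}" for example in examples]
    lines.append("New instruction:")
    return "\n".join(lines)

# Placeholder seed prompts (hypothetical, not the project's actual data).
seed_prompts = [
    "Summarize the following paragraph.",
    "Translate this sentence into French.",
    "Explain why the sky is blue.",
    "List three uses for a paperclip.",
]
topics = dedupe_topics(["History", "history ", "Chemistry"])
prompt = build_instruction_prompt(
    seed_prompts, topics[1], "question that needs recent knowledge"
)
```

The resulting `prompt` string would then be sent to the base LLM, whose completion becomes one new synthetic instruction.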

The second stage: Principle-Driven Self-Alignment.

To steer the AI assistant toward helpful, reliable and ethical answers, the research team wrote a set of 16 principles in English to serve as guidelines.

The 16 principles encompass both the desired quality of the answers generated by the AI assistant and the rules behind the behavior of the AI assistant in getting the answers.

How exactly does an AI assistant generate answers that adhere to the principles in an in-context learning (ICL) workflow?

The approach chosen by the research team is to have the AI assistant consult the same fixed set of examples every time it generates a response, replacing the varying sets of human-annotated examples that earlier workflows required.

The LLM is then prompted to generate new topics and, after duplicate topics are removed, to generate new instructions that match the specified instruction type and topic.

The 16 principles, the ICL examples, and the instructions from the first-stage Self-Instruct together trigger the matching rules in the LLM behind the AI assistant.

Once generated content is detected as harmful or non-compliant, the assistant refuses to output it.
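One way to picture the second stage is as prompt assembly: the same principles and the same in-context examples are placed in front of every new query. The sketch below only illustrates that idea. The section markers, the function name and the two principle texts are hypothetical placeholders, not the wording used by the authors.

```python
def build_self_align_prompt(principles, exemplars, instruction):
    """Prepend fixed principles and fixed ICL exemplars to a new instruction."""
    parts = ["# Principles"]
    parts += [f"{i}. {text}" for i, text in enumerate(principles, start=1)]
    parts.append("# Examples")
    for exemplar in exemplars:
        parts.append(f"User: {exemplar['instruction']}")
        parts.append(f"Assistant: {exemplar['response']}")
    parts.append("# New query")
    parts.append(f"User: {instruction}")
    parts.append("Assistant:")  # the LLM continues from here
    return "\n".join(parts)

# Placeholder principles and exemplar (the real setup has 16 and 5).
principles = [
    "Be helpful and informative.",
    "Decline requests that are harmful or illegal.",
]
exemplars = [
    {"instruction": "What is 2 + 2?", "response": "2 + 2 equals 4."},
]
prompt = build_self_align_prompt(
    principles, exemplars, "How do vaccines work?"
)
```

Because the prefix never changes, the only thing that varies from call to call is the final `User:` line.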

The third stage: Principle Engraving.

The main task of this stage is to fine-tune the original LLM on the self-aligned responses. These responses are generated by the LLM itself through self-prompting.

At the same time, the principles and demonstrations are pruned from the prompt of the fine-tuned LLM.

The purpose of the fine-tuning is to let the AI assistant directly generate responses that align well with human intent, even when the 16 principles and the ICL examples are not supplied.

It is worth mentioning that, because the model parameters are shared, the responses the AI assistant generates are aligned across a wide variety of questions.

The fourth stage: Verbose Cloning.

To strengthen this capability, the research team applies context distillation in the final stage so that the model generates more comprehensive and detailed content.
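Context distillation, in general terms, means generating responses with an extra context in the prompt and then training on those responses without that context. The sketch below shows only the data-preparation half under that general definition. The verbose prompt text, the function names and the stand-in `fake_generate` are hypothetical, and a real setup would call the principle-engraved model instead.

```python
def make_cloning_pairs(generate, instructions, verbose_prompt):
    """Build (input, target) pairs for context distillation.

    The target is produced WITH the verbose prompt in context,
    but the stored input leaves that prompt out.
    """
    pairs = []
    for instruction in instructions:
        teacher_input = f"{verbose_prompt}\n\nUser: {instruction}\nAssistant:"
        pairs.append({"input": instruction, "target": generate(teacher_input)})
    return pairs

def fake_generate(text):
    """Stand-in for a model call, used only to make the sketch runnable."""
    return f"[detailed answer produced from {len(text)} prompt characters]"

# Hypothetical context that asks for long, thorough answers.
verbose_prompt = "Answer comprehensively and in detail."
instructions = ["Explain photosynthesis.", "Describe how a bill becomes law."]
pairs = make_cloning_pairs(fake_generate, instructions, verbose_prompt)
```

Fine-tuning on such pairs is what would let the model produce the detailed style by default, without being asked for it each time.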

Comparison of the four stages of the classical process (InstructGPT) and SELF-ALIGN

Most intuitive of all is a table listing the supervision methods used by recent closed-source and open-source AI assistants.

Apart from the new self-alignment method that this study proposes for Dromedary, earlier work relies on SFT (supervised fine-tuning), RLHF (reinforcement learning from human feedback), CAI (Constitutional AI), and KD (knowledge distillation) for alignment.

As you can see, previous AI assistants such as InstructGPT or Alpaca required at least 50,000 human annotations.

However, the whole SELF-ALIGN process needs fewer than 300 lines of annotations (195 seed prompts, 16 principles and 5 examples).
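The annotation budget can be checked with a quick tally. The counts come from the figures quoted in this article; the dictionary layout and variable names are just for illustration.

```python
# Human-written items quoted in the article.
annotation_counts = {
    "seed prompts (175 general + 20 topic-specific)": 175 + 20,
    "principles": 16,
    "in-context examples": 5,
}
total_annotations = sum(annotation_counts.values())
within_budget = total_annotations < 300
```

The tally comes to 216 items, comfortably under the 300-line figure and far below the 50,000 or more annotations cited for earlier assistants.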

The team behind the work

Dromedary comes from the MIT-IBM Watson AI Lab at IBM Research, CMU LTI (Language Technologies Institute), and the University of Massachusetts Amherst.

Founded in 2017, the IBM Research MIT-IBM Watson AI Lab is a collaborative community of scientists at MIT and IBM Research.

Working primarily with global organizations to develop research around AI, it is dedicated to driving cutting-edge advances in AI and translating breakthroughs into real-world impact.

CMU's Language Technologies Institute, a department of CMU's School of Computer Science, focuses on NLP, IR (information retrieval), and other research related to computational linguistics.

The University of Massachusetts Amherst is the flagship campus of the University of Massachusetts system and is a research university.

One of the authors of the paper behind Dromedary, Zhiqing Sun, is currently a PhD candidate at CMU and a graduate of Peking University.

Amusingly, when he asked the AIs for basic information about himself during the experiments, each of them made up a paragraph with no data behind it.

There was nothing he could do about it except write it up as a failure case in the paper:

You really can't stop laughing, hahahahahaha!!!

It seems that AI's habit of talking nonsense with a straight face will also need a new approach to solve.
