"Lightning diplomacy" in the UK: London seeks to host a global AI regulatory center

Artificial intelligence (AI) is evolving much faster than expected. There is growing concern that, if it gets out of hand, the technology could pose catastrophic risks to humanity. At the same time, countries are scrambling to work out regulatory standards and scope, hoping to govern AI development without stifling their own innovation.

The British government has taken the lead with a round of "lightning diplomacy". The Times reported on June 3 that the government is considering setting up an international AI regulator in London, modeled on the International Atomic Energy Agency (IAEA). Prime Minister Rishi Sunak hopes to use this to make Britain a global AI center, promising to play a "leading role" in developing "safe and secure" rules.

On June 7-8, Sunak will lead a delegation of political and business representatives to the United States. He will seek the support of U.S. President Joe Biden for convening a summit in London this fall, at which leading governments and multinational companies would discuss the development of international rules for AI and the establishment of a global body similar to the IAEA. The IAEA, founded in 1957 and headquartered in Vienna, Austria, has 176 member states and is dedicated to promoting the peaceful use of nuclear energy.

According to the Daily Telegraph, Chloe Smith, the British Minister for Science, Innovation and Technology, will also meet with foreign counterparts to discuss AI-related topics at the OECD Technology Forum in Paris on June 6. The event is funded by the British government, and the invited countries include the United States, Japan, South Korea, Israel, Australia, New Zealand, Brazil, Chile, Norway, Turkey, Ukraine and Senegal.

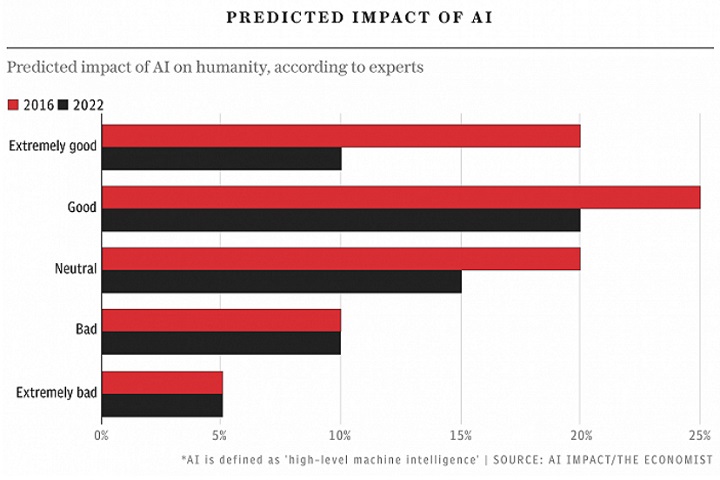

According to a joint survey conducted by The Economist, experts' expectations regarding the impact of AI on humans have tended to be negative over the past six years.

UK government sources told The Guardian they hope that an approach built on basic principles will help coordinate the differing regulatory efforts of various countries, and that it is more likely to gain widespread support than the earlier EU position of banning some individual AI products, such as facial recognition software.

In 2021, the EU published strict rules for AI applications, including restrictions on the use of facial recognition software by police in public places. In March, the AI-focused nonprofit Future of Life Institute released an open letter, signed by more than 1,000 technologists and researchers, calling for a six-month moratorium on the development of large-scale AI models. The letter argued that development of powerful AI systems should continue only once their effects are assured to be positive and their risks manageable.

China is not far behind. On April 11, the Cyberspace Administration of China (the State Internet Information Office) publicly solicited comments on its draft "Management Measures for Generative AI Services," which sets a baseline for the industry by specifying conditions and requirements, identifying the responsible parties, establishing mechanisms for handling problems, and clarifying legal liability.

In May 2023, the European Union introduced a proposal for an Artificial Intelligence Act requiring companies developing generative AI such as ChatGPT to disclose any copyrighted material used to train their models. On May 24, the U.S. White House Office of Science and Technology Policy released a questionnaire for the public to inform a comprehensive national regulatory strategy now under development.

On May 30, the Center for AI Safety, a nonprofit organization, released a joint open letter consisting of a single statement: "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war." The open letter was initially signed by more than 350 executives, researchers and engineers working on AI in various countries.

The very next day, Sunak met with the heads of OpenAI, Google DeepMind and Anthropic, among others. They said they would work together to ensure that society benefits from AI technologies, and they discussed the risks posed by AI, including disinformation, national security and existential threats.

Commenting on the experts' open letter, Sunak acknowledged that people would be concerned by reports that AI poses existential risks similar to pandemics or nuclear war, and said, "I hope they are reassured that the (U.K.) government is looking very carefully at this subject."

As the capital of a long-established developed country, London has the advantages of political stability, a sound legal system, a rich talent pool and a dominant academic language. Its industry clustering effect is especially strong: the London City Council has been vigorously promoting the East London Tech City (Tech City) project since 2011, and the area is the first choice for many high-tech giants setting up European or even global headquarters, including TikTok, Snapchat, Meta, Google and Amazon.

In an interview with The Times, a senior official showed full confidence, saying the UK is "a true tech superpower that should show global leadership and build an alliance rooted in shared values, as well as secure international funding to support this work."
